When training your statistical model to predict something, we never do so without our hold out data - our test data. This allows us to review the performance of our model against data that was not used during training, but for which we still know the outcome. However, the method through which you choose to divide your data in training and testing data can sometimes introduce bias and skew your predictive prowess. Were you to simply divide the data in half, you might have an uneven distribution of the population. For instance, you may have the data ordered by total sales. Splitting this in half might mean you trained your model on an unevenly heavy-handed sales total.

To that end, we avoid ordering our data on the SQL Server side before moving it to our statistical software of choice (it better be R). However, this still isn't enough. Depending how your table is constructed, or was populated, it may be naturally ordered in some way that will create some sort of population bias. Tables with clustered indexes are great, but it also means your data is pre-ordered by that clustering key. That key might be negatively influencing the distribution. Never select the top 50% either - the data in the table is likely ordered in the table itself - you may still be getting biased sets.

To avoid this biased data selection, we introduce random number generation to our prepared data, and then split it. I show here the method for random number generation in SQL Server, and the method for doing so in R. While an analyst would quickly hop to the R version, which is incredibly easy, the SQL Server developer who wants to automate processes and data preparations, wants to be sure this kind of information is already stored in the database before the analyst or automated procedures need it, reducing the future workload for all involved.

Generating Random Numbers in SQL Server

Create the following view

create view [dbo].[v_getRandomNum]

as

select

Random_ZeroOrOne = abs(cast(newid() as binary(6)) %2) -- between 0 and 1

, Random_Num = abs(cast(newid() as binary(6)) %1000) + 1 -- between 1 and 1000

, Random_Big = abs(cast(newid() as binary(6)) %999999)

, aGUID = newid()

;

Test it with some sample data.

-- Create Temp Table to hold our Sample Data

if object_id('tempdb..##SampleRandomized','u') is not null begin drop table ##SampleRandomized end;

create table ##SampleRandomized (

RowID int identity(1,1)

, rnd_ZeroOrOne tinyint

, rnd_Int int

);

-- Create Sample Dataset with Randomized Numbers from SQL Server

-- Insert 1000 rows of sample numbers (using sys.objects as the numerator)

insert into ##SampleRandomized (rnd_ZeroOrOne, rnd_Int)

select top 100

rnd_OneOrZero = random.Random_ZeroOrOne

, rnd_Int = random.Random_Num

from sys.objects, dbo.v_getRandomNum as random

;

select * from ##SampleRandomized

Now you can use the random number generating view by joining it to your data query. In the example above I've joined it to sys.objects simply as a means of row generation. The distribution of the random numbers will tend to be evenly distributed, given enough rows.

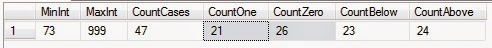

In the sample I have only pulled together 100 rows, but if we look at the number of cases that are 1, compared to the number of cases that are 0 we will see it's pretty evenly split near 50%. The same distribution is applicable to our random integer, between 1 and 1000, we find about 50% are below 500, and 50% are at or above 500. Each time you generate the random numbers you should see slightly different distributions.

select MinInt = min(rnd_Int)

, MaxInt = max(rnd_int)

, CountCases = count(1)

, CountOne = sum(case when rnd_ZeroOrOne =1 then 1 else 0 end)

, CountZero = sum(case when rnd_ZeroOrOne =0 then 1 else 0 end)

, CountBelow = sum(case when rnd_int < 500 then 1 else 0 end)

, CountAbove = sum(case when rnd_int >= 500 then 1 else 0 end)

from ##SampleRandomized Using this to break our data into a training and testing set is now a trivial matter.

-- Now we can easily break the set in half, based on Random values, decreasing bias.'

if object_id('tempdb..##FullDataset','u') is not null begin drop table ##FullDataset end;

select *

, dataset_byInt = case when rnd_int < 500 then 'train' else 'test' end

, dataset_byBit = case when rnd_ZeroOrOne = 0 then 'train' else 'test' end

into ##FullDataset

from ##SampleRandomized

Random Number Generation in R

If you plan on using R for your predictive analytics, you'll likely be using the runif command. runif stands for Random Uniform and generates a uniform distribution of random numbers between 0 and 1.

## Load the needed libraries

library(data.table)

library(RODBC)

library(ggplot2)

## Get your sample randomized data

url1 <- "Driver={SQL Server};Server=YourServerName;Database=YourDatabaseName;Trusted_Connection=Yes;"

dsr1 <- odbcDriverConnect(url1) ## Using RODBC

data1 <- data.table(sqlQuery(dsr1, paste("select * from ##FullDataset")))

## Transform rnd_ZeroOrOne into a factor

data1$rnd_ZeroOrOne <- factor(data1$rnd_ZeroOrOne)

## Random Number Generation via R

RowsInData <- nrow(data1)

data1$rnd_FromR <- runif(RowsInData)

After implementing the runif based on nrows (number of rows), we can view the results of that vector.

Now we can break up our data into different sets, and mark them as train or test.

## Create rowcounts, by random methods, for both our test and train sets.

## this can be done in compressed language with aggregate or ddply grouping

## however, this is the more readily accessible to beginners.

rCountBelow <- data.table(provider = 'R', dataset = 'train', cases = nrow(subset(data1, rnd_FromR < .5)))

rCountAbove <- data.table(provider = 'R', dataset = 'test', cases = nrow(subset(data1, rnd_FromR >= .5)))

sCountBelow <- data.table(provider = 'SQL', dataset = 'train', cases = nrow(subset(data1, rnd_Int < 500)))

sCountAbove <- data.table(provider = 'SQL', dataset = 'test', cases = nrow(subset(data1, rnd_Int >= 500)))

## union all the row counts

caseCounts <- rbind(rCountBelow, rCountAbove, sCountBelow, sCountAbove)

A very uniform distribution indeed, bias reduced through random numbers.

To demonstrate visually, here's a gpplot of the distribution differences (there is none) between our SQL Server random numbers and the random numbers generated in R.

## View the distribution of Random row selection from R

ggplot(data = caseCounts,

aes(x = factor(provider), y=cases, fill = factor(dataset))) +

geom_bar(stat="identity") +

scale_y_continuous(limits=c(0,100))

Enjoy,

-P

-P

No comments:

Post a Comment