How to generate a wordcloud image from a website URL in just three lines of R code.

I recently found a very groovy wordcloud package for R, written by Ian Fellows, which I then extended to accept a URL. Once everything is set up as outlined here, you can generate a wordcloud on the fly simply by pasting in a web address. You should have a basic understanding of installing and loading R packages to set this up.

Required Libraries

You'll need to install the following packages:

```r
library(data.table)   # Used for filtering and subsetting the words
library(httr)         # Used to fetch the webpage URL
library(XML)          # Used to extract the body tag content from the page
library(tm)           # Text mining features for stopword and punctuation removal
library(wordcloud)    # Used to generate the wordcloud
library(RColorBrewer) # Coloring the wordcloud
```
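If you're not sure which of these are already on your machine, here's a small sketch that checks with base R's installed.packages() and installs only what's missing with install.packages(). The names findMissing(), installMissing() and pageCloudifyPkgs are my own hypothetical helpers, not part of any package.

```r
# Hypothetical helper: returns the names in `pkgs` that are not installed yet
findMissing <- function(pkgs) {
  pkgs[!pkgs %in% rownames(installed.packages())]
}

# Hypothetical helper: installs whatever is missing (needs network access and a CRAN mirror)
installMissing <- function(pkgs) {
  missingPkgs <- findMissing(pkgs)
  if (length(missingPkgs) > 0) install.packages(missingPkgs)
  invisible(missingPkgs)
}

# The packages pageCloudify.R loads
pageCloudifyPkgs <- c("data.table", "httr", "XML", "tm", "wordcloud", "RColorBrewer")
```

Run installMissing(pageCloudifyPkgs) once; on later runs it finds nothing missing and does nothing.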

Create or download the pageCloudify.R file

pageCloudify.R is my script for loading the URL, scraping its contents, and generating the wordcloud image. You can paste the script into a new file called pageCloudify.R, or simply download it from here.

```r
## pageCloudify.R
## Creates a wordcloud for one or more webpage URLs.
## Most stopwords (e.g. "the", "and") are removed by default.
##---------------------------------
## Function: pageCloudify()
## Parameters:
# theURLs: a string or vector of strings containing valid URL(s), e.g. http://starwars.wikia.com/wiki/Star_Wars
# freqReq: an integer; the minimum number of times a word must appear to be included in the cloud.
# toPNG: output wordclouds to PNG files. The default is FALSE, which sends the wordcloud to the screen device.
## Invalid URL: the webpage HTML must contain a <body> tag. This is the portion of content used
## to build the word cloud.
## Warnings: warnings will be generated for clouds containing more words than can fit in the cloud.
## Try increasing the freqReq parameter to decrease the number of words in the cloud.
## Many English stopwords are removed by default. To view the stopwords removed (they come from the tm package):
## stopwords(kind = "en")
## Libraries required: they are loaded for you when you source this file.
## However, they do need to be installed already.
# library(data.table) # Used for filtering and subsetting the words
# library(httr) # Used to fetch the webpage URL
# library(XML) # Used to extract the body tag content from the page
# library(tm) # Text mining features for stopword and punctuation removal
# library(wordcloud) # Used to generate the wordcloud
# library(RColorBrewer) # Coloring the wordcloud
##### Examples #####
## Copy the pageCloudify.R file to your working directory.
## Set your working directory.
# setwd("c:/personal/r")
## Load the pageCloudify.R source file.
# source("pageCloudify.R")
## Example usage with a single URL
# pageCloudify(theURLs = "http://en.wikipedia.org/wiki/Star_Wars", freqReq = 15, toPNG = FALSE)
## Example usage with multiple URLs, to PNG files
## Each PNG file goes to the working directory and is named
## mywordcloud_X.png, where X is an ordinal integer from 1 to the number of URLs.
# theURLs <- c("http://incrediblemouse.blogspot.com/2014/11/sql-server-r-and-facebook-sentiment.html",
#              "http://incrediblemouse.blogspot.com/2014/11/sql-server-predictive-analytics.html",
#              "http://incrediblemouse.blogspot.com/2014/11/sql-server-r-and-logistic-regression.html",
#              "http://incrediblemouse.blogspot.com/2014/11/sql-server-and-univariate-linear.html")
# pageCloudify(theURLs, freqReq = 5, toPNG = TRUE)

library(data.table)
library(httr)
library(XML)
library(wordcloud)
library(tm)
library(RColorBrewer)

pageCloudify <- function(theURLs, freqReq = 10, toPNG = FALSE) {
  for (y in seq_along(theURLs)) {
    ## Fetch the page with httr (follows redirects, handles https), then parse the HTML with XML
    resp <- GET(theURLs[y])
    stop_for_status(resp)
    parsedHTML2 <- htmlParse(content(resp, as = "text", encoding = "UTF-8"), asText = TRUE)
    PostEntry <- getNodeSet(parsedHTML2, "//body")
    if (length(PostEntry) == 0) stop("No <body> tag found at ", theURLs[y])

    ## Munging for Wordcloud
    z <- data.table(words = xmlSApply(PostEntry[[1]], xmlValue))
    z <- data.table(words = tolower(gsub("\n", " ", z$words, fixed = TRUE)))
    z <- data.table(words = gsub("[^[:alnum:] ]", "", z$words))
    z <- data.table(words = unlist(strsplit(z$words, " ")))
    z$words <- removeWords(z$words, stopwords(kind = "en"))
    ## Drop the empty strings left behind by splitting and stopword removal
    z <- subset(z, words != "" & words != " ")
    z <- data.table(words = removePunctuation(z$words))
    ## Count how often each word appears
    z <- z[, .(freq = .N), by = words]
    ## Keep words of 3-25 characters that appear at least freqReq times
    z <- subset(z, nchar(words) > 2 & nchar(words) < 26 & freq >= freqReq)

    ## Word Cloud Color Palette
    pal <- brewer.pal(6, "Dark2")
    ## pal <- pal[-(1)]
    if (toPNG) {
      png(filename = paste0("mywordcloud_", y, ".png"), bg = "transparent")
    }
    wordcloud(z$words, z$freq, scale = c(4, .8), min.freq = freqReq,
              random.color = TRUE, colors = pal)
    if (toPNG) dev.off()
  }
}
```

The script provides a new function called pageCloudify().

Parameters:

theURLs: a string or vector of strings containing valid URL(s).

freqReq: an integer giving the minimum number of times a word must appear to be included in the cloud.

toPNG: output wordclouds to PNG files. The default is FALSE, which sends the wordcloud to the screen device.
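To see roughly what the munging steps inside pageCloudify() do to a page's text, here's a minimal base-R sketch of the same kind of pipeline run on a plain string, with no web request. countWords() is a hypothetical helper, and it uses a tiny stand-in stopword list instead of tm's stopwords(kind = "en"), so its counts won't exactly match the script's.

```r
# Hypothetical helper mirroring the munging in pageCloudify():
# lowercase, strip non-alphanumerics, split on spaces, drop stopwords,
# keep 3-25 character words, then count and filter by freqReq.
countWords <- function(text, freqReq = 1,
                       stopWords = c("the", "and", "of", "a", "to", "in")) {  # stand-in list, not tm's
  text <- tolower(gsub("\n", " ", text, fixed = TRUE))
  text <- gsub("[^[:alnum:] ]", "", text)
  words <- unlist(strsplit(text, " ", fixed = TRUE))
  words <- words[nzchar(words) & !words %in% stopWords]
  words <- words[nchar(words) > 2 & nchar(words) < 26]
  counts <- table(words)
  counts <- counts[counts >= freqReq]
  # Most frequent words first
  sort(counts, decreasing = TRUE)
}
```

For example, countWords("The Force and the Jedi.\nThe Force awakens; Jedi return. Force!", freqReq = 2) keeps only force (3) and jedi (2); raising freqReq shrinks the cloud in exactly this way.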

Plot a WordCloud

Now that you've got your packages installed and the script above saved to a memorable location, you can generate a wordcloud in three lines of code. Be sure to set your working directory to the folder where you stored pageCloudify.R.

```r
setwd("c:/personal/r") # Change this to the stored location of pageCloudify.R
source("pageCloudify.R")
pageCloudify(theURLs = "http://en.wikipedia.org/wiki/Star_Wars", freqReq = 25, toPNG = FALSE)
```

You can even pass in multiple URLs all at once, and output them to PNG files.

```r
## Example usage with multiple URLs, to PNG files
## Each PNG file goes to the working directory and is named
## mywordcloud_X.png, where X is an ordinal integer from 1 to the number of URLs.
theURLs <- c("http://incrediblemouse.blogspot.com/2014/11/sql-server-r-and-facebook-sentiment.html",
             "http://incrediblemouse.blogspot.com/2014/11/sql-server-predictive-analytics.html",
             "http://incrediblemouse.blogspot.com/2014/11/sql-server-r-and-logistic-regression.html",
             "http://incrediblemouse.blogspot.com/2014/11/sql-server-and-univariate-linear.html")
pageCloudify(theURLs, freqReq = 5, toPNG = TRUE)
```

Modify the function for even more cool features, like different color palettes: simply swap "Dark2" in the brewer.pal() call for another RColorBrewer palette name.
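If you'd rather choose the palette when you call the function, one option is a small helper that validates the name against RColorBrewer's brewer.pal.info table before calling brewer.pal(). pickPalette() is a hypothetical name; this is a sketch, not part of the original script.

```r
library(RColorBrewer)

# Hypothetical helper: returns n colours from any RColorBrewer palette,
# clamped to the range brewer.pal() accepts for that palette.
pickPalette <- function(paletteName = "Dark2", n = 6) {
  if (!paletteName %in% rownames(brewer.pal.info)) {
    stop("Unknown RColorBrewer palette: ", paletteName)
  }
  maxColors <- brewer.pal.info[paletteName, "maxcolors"]
  # brewer.pal() needs at least 3 colours and at most the palette's maximum
  brewer.pal(max(3, min(n, maxColors)), paletteName)
}
```

To wire it in, you could give pageCloudify() an extra (hypothetical) paletteName = "Dark2" argument and replace pal <- brewer.pal(6, "Dark2") with pal <- pickPalette(paletteName).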

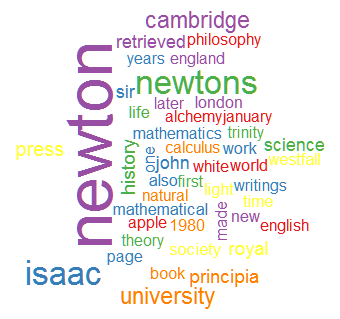

The first cloud is from Isaac Newton's Wikipedia page. I mean, who's a better candidate for a colorful wordcloud than the man who figured out that white light contains all the visible colors?

While this is awesome from a programmer's standpoint, there are great sites where you can simply paste in some text and generate highly artistic wordclouds; you might be interested in wordle.net.

Enjoy!

-P