Apparently, if you're talking about congress, you're probably not using flowery adjectives.

I love the integration of SQL Server and R for data analysis and storage. I often jump back and forth between platforms depending on what I want to accomplish and the efficiency at which I know I can get the job done. Granting that you can perform all of this analysis, and to a much larger statistical extent, inside of R, I still lean on SQL Server for my data storage engine.

I demonstrate here the use of R for accessing Facebook public posts, SQL Server for storage, manipulation, and sentiment analysis of that data. Again, you could achieve it all in R, but my background is in data architecture, so I have a penchant for storing data responsibly for future needs (I wish I did the same with my money).

The above chart, aggregated in SQL Server and prettied up with Excel, scored word sentiment found in 200 recent public facebook posts, for each search term category.

Software Needed

R can be downloaded from

CRAN and R Studio can be downloaded from

RStudio.

SQL Server Express 2014, capable of everything here, can be downloaded from

Microsoft. I highly recommend the version: Express with Advanced Services (SQLEXPRADV), since it comes with full text search as well (not needed here, but is a very efficient text search engine you might one day want).

The process of the whole project is as follows;

1.) Obtain your facebook developer API access token.

2.) Configure your R environment for access to the API

3.) Prepare a SQL Server table for facebook data.

4.) Retrieve data from Facebook in R and save it to SQL Server.

5.) Prepare a SQL Server function for word parsing.

6.) Create a WordScorer lookup table.

7.) Score the facebook data, by search term, providing the average sentiment score for each.

Obtain your facebook developer API access token

I show here the basic configuration needed. The Site URL may need to be slightly different, but we'll get to that shortly.

Make note of your App ID and App Secret. You'll need them both for use in R.

Configure your R environment for access to the API

You'll need to install and load a few R libraries ahead of time. These will cover the bases for rFacebook, OAuth, SQL Server access, and even future plotting for commands we will using here. Some are just useful for extended features we'll not be using here, but that you should have on hand. All of the facebook API interaction in R is done via the awesome

Rfacebook library, hosted on

Cran and written by Pablo Barbera.

library(Rfacebook);

library(data.table);

library(RCurl);

library(rjson);

library(RODBC);

library(ROAuth);

library(ggplot2);

## Also set your working directory.

## Your Facebook OAuth credentials will be saved

## here for future use.

setwd("C:/Personal/R")

Now you'll authenticate your OAuth connection using your App ID and App Secret from the facebook application basic settings page, as noted previously.

## This is only performed once.

fb_oauth <- fbOAuth("YourAppIDHere", "YourAppSecretHere", extended_permissions = TRUE)

save(fb_oauth, file="fb_oauth")

## Now we only need to do this for all future OAuth loading.

load("fb_oauth")

When you run the fbOAuth, your R console will provide further instructions. These instructions contain the URL you should apply to your facebook application settings for the Site URL.

Copy and paste into Site URL on Facebook App Settings: http://localhost:1410/

When done, press any key to continue...

After applying the Site URL, press any key in the console, as the instructions state. Your web browser will open, access facebook, and tell you when it is completed. Afterwards, close your browser.

That's the one-time setup (until the token expires). From this point forward, you'll only need to load("fb_oauth")

Prepare a SQL Server table for your facebook data.

In SQL Server we'll create a table to hold all of the variables the

Rfacebook function searchFacebook returns. We could simply pass the sqlSave command and let

RODBC create our table for us, however, it's not all that savvy when it comes to data types. It tends to take the path of least resistance, making most numbers a float, and all character vectors as varchar(255). Since one of the vectors in the results, [message], containing the contents of the facebook post can contain a great number of characters,

RODBC will truncate this data before inserting it. However, if we specify the table data types more explicitly in advance, we can get greater control. I've chosen a varchar(4000) to hold the message body. Some posts can even be longer, but for this simplistic sentiment analysis it's plenty large enough, even if we get some truncation. I would have preferred to use SQL Server's [text] data type, but was unable to get

RODBC to stop complaining about it. I've tabled that for another rainy day.

It's also important to note that your table should mirror, column for column, what you plan on having in your R data table. If you want to insert records to an existing table with additional columns, you should really insert it to a temporary staging table, and migrate the data via trigger or otherwise. Yet another topic for a different rainy day.

create table dbo.facebooksearchresults (

from_id bigint null

, from_name varchar(255) null

, [message] varchar(2000) null

, created_time varchar(255) null

, [type] varchar(255) null

, link varchar(255) null

, id varchar(255) null

, likes_count integer null

, comments_count integer null

, shares_count integer null

, searchstring varchar(255) null

, dateadded date

);

Retrieve data from Facebook in R and save it to SQL Server.

Now, in R, we can utilize the searchFacebook function for specific terms, and save the results back to SQL Server. I've repeated my search (and data save), pulling down 200 posts, for each search term I was interested in. The example below searches for posts containing the term ebola as an example. You might consider a vector of terms, and looping over them, but for simplicity and training, I've left that out of this article.

1: ## Search Facebook Public Posts, and save data to SQL Server.

2: searchString = "ebola"

3: data <- searchFacebook(string=searchString, token=fb_oauth, n = 200, since = NULL, until = NULL)

4: ## Convert to a data table.

5: datatbl <- data.table(data)

6: ## Add a new column to our table with the search string.

7: ## This field will allow us to append this data to SQL Server

8: ## and can act as the key to filtering for this specific search.

9: ## Now we can search multiple terms, and keep loading data.

10: datatbl$searchstring <- searchString

11: ## Add the dateadded, in case you want to compare a search on one day, to another.

12: datatbl$dateadded <- as.character(Sys.Date())

The last two fields in our SQL Server table, searchString and dateadded, are not returned by the searchFacebook function, but we'll add those to our R data table so they can be stored with our results.

Your console should indicate that x number of posts were retrieved. We can now save the data to our SQL Server table

1: ## Connect to your SQL Server.

2: url1 <- "Driver={SQL Server};Server=YourServerAndInstanceName;Database=YourDatabaseName;Trusted_Connection=Yes;"

3: localdb <- odbcDriverConnect(url1)

4:

5: ## For very specific colnames and data type mapping, roll your own.

6: varTypes <- c("bigint", "varchar", "varchar", "varchar", "varchar",

7: "varchar", "varchar", "integer", "integer", "integer", "varchar", "date")

8: names(varTypes) <- c("from_id", "from_name", "message", "created_time",

9: "type", "link", "id", "likes_count", "comments_count",

10: "shares_count", "searchstring", "dateadded")

11:

12: ## Save the data back to SQL Server.

13: sqlSave(localdb, datatbl, varTypes=varTypes, tablename ="facebookSearchResults", append = T, rownames = F)

Prepare a SQL Server function for word parsing.

In order to parse the large [message] post contents into individual words, we need to delineate the words by spaces. There are a great number of ways to splice strings into words, but I have a favorite function for SQL Server I found a long time ago, that I still use today. It's efficient mostly because of its use of an Itzik Ben-Gan style of exponentially recursive CTEs.

One time process: Create the following function, enabling us to split strings.

USE [YourDatabaseName]

GO

create function [dbo].[delimitedsplit8k]

--===== define i/o parameters

(@pstring varchar(8000), @pdelimiter char(1))

--warning!!! do not use max data-types here! it will kill performance!

returns table with schemabinding as

return

--===== "inline" cte driven "tally table" produces values from 1 up to 10,000...

-- enough to cover varchar(8000)

with e1(n) as (

select 1 union all select 1 union all select 1 union all

select 1 union all select 1 union all select 1 union all

select 1 union all select 1 union all select 1 union all select 1

), --10e+1 or 10 rows

e2(n) as (select 1 from e1 a, e1 b), --10e+2 or 100 rows

e4(n) as (select 1 from e2 a, e2 b), --10e+4 or 10,000 rows max

ctetally(n) as (--==== this provides the "base" cte and limits the number of rows right up front

-- for both a performance gain and prevention of accidental "overruns"

select top (isnull(datalength(@pstring),0)) row_number() over (order by (select null)) from e4

),

ctestart(n1) as (--==== this returns n+1 (starting position of each "element" just once for each delimiter)

select 1 union all

select t.n+1 from ctetally t where substring(@pstring,t.n,1) = @pdelimiter

),

ctelen(n1,l1) as(--==== return start and length (for use in substring)

select s.n1,

isnull(nullif(charindex(@pdelimiter,@pstring,s.n1),0)-s.n1,8000)

from ctestart s

)

--===== do the actual split. the isnull/nullif combo handles the length for the final element when no delimiter is found.

select itemnumber = row_number() over(order by l.n1),

item = substring(@pstring, l.n1, l.l1)

from ctelen l

;

Create a WordScorer lookup table

We'll need one final ingredient before we can start scoring the words by search term. We need a lookup table that assigns a sentiment value to words. Naturally we're not going to use the whole dictionary, but we can get started with a pretty straight-forward approach.

Not every possible word needs a score. We need to ignore "a", "and", "the", and all those other words out that don't generally indicate a users sentiment, like the word "chair". The word chair doesn't give us any idea what kind of mood the user is in when they wrote it, or the sentiment behind those words.

My WordScorer table has 2477 words commonly used to score sentiment. They include adjectives and verbs that can generally be considered to be positive or negative influencers on sentiment. For instance, "abusive", "lol", and "jackass".

You'll need to create the following table and

import this data (currently in google doc spreadsheet format) into the table.

create table dbo.wordscorer(

wordid int identity(1,1) not null

, word varchar(50) not null

, score int not null

, scoreplusone int not null

constraint pk_wordscorer primary key clustered (wordid asc)

);

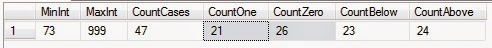

Score the facebook data, by search term, providing the average sentiment score for each.

Finally, the peas of resistance! We can now query our posts, parse the words, score them, and report the average sentiment for each search string.

1: with TheWords as (

2: select r.SearchString, z.item

3: from dbo.facebooksearchresults r

4: cross apply (

5: select *

6: from dbo.DelimitedSplit8K (r.[message],'')

7: ) z

8: where r.[message] is not null

9: )

10: , TheWordsScored as (

11: select SearchString, item, w.word, w.score

12: from TheWords i

13: join [dbo].[WordScorer] w

14: on i.item = w.word

15: )

16: select SearchString, AverageSentimentScore = avg(cast(score as float))

17: from TheWordsScored

18: group by searchString

19: order by avg(cast(score as float)) desc

Additional Data Considerations

There is more analysis and cleaning that should be considered when performing sentiment analysis on these posts. First of all, the search terms are searched individually, and never combined. Therefore, a two word search term will yield inconsistent results. After retrieving the data, you'll have to filter that set where both are found, eliminating most if not all of the results you pulled. So, in my search terms, "Taylor Swift" will return posts that merely mention the word swift. This means the sentiment result for the two word term is erroneous, unless filtered where both are present.

Secondly, the search is performed by facebook over the message content and the comment content. So if you're looking for just the message contents containing the terms, you'll again have to filter for this on the backend after retrieval.

Where to from here

At this point, I'd be very interested in automating a regular pull of the data to see how that sentiment moves and changes over time. Facebook doesn't let you go very far back in time, even with the date parameters. It must be nice to be Facebook and have all this data in their hands already. That's my problem - I've got more side projects of personal interest than I know what to do with.

Enjoy,

-P

Have a great and fantastically wonderfully cheerful day!

(+9 positive sentiment bagged right there)

I am turned into a sort of machine for observing facts and grinding out conclusions.

Charles Darwin